
Recently, NVIDIA drew significant industry attention by launching a generative AI service for 3D modeling. Earlier generative AI applications focused mainly on flat, non-3D content such as text, images, and video. NVIDIA is now using generative AI to help companies build 3D assets. This is expected to accelerate the digital twin and simulation industries and, in turn, expand the use of AI in the physical world.

Targeting Industrial Needs with "CUDA Native"

NVIDIA, a global leader in accelerated computing, is signaling new directions for AI in industry. In two fireside chats at SIGGRAPH 2024, founder and CEO Jensen Huang discussed how generative AI and accelerated computing can transform industries such as manufacturing through visualization. Alongside these discussions, NVIDIA introduced a new set of NIM microservices.

SIGGRAPH is known as the venue for the latest innovations in computer graphics. At this year's event, NVIDIA unveiled generative AI models and NIM microservices for working with OpenUSD, geometry, physics, and materials. OpenUSD is an open-source framework for describing and exchanging 3D scene data. It is gradually becoming the standard in many sectors, including 3D visualization, architecture, design, and manufacturing.

These models and services equip developers to expedite application development across industries including manufacturing, automotive, and robotics.

In the discussions, Huang stressed the importance of building digital twins and virtual worlds. He said industries are using large-scale digital twins, some as large as entire cities, to improve efficiency and cut costs. "For example, before next-generation humanoid robots are deployed, AI can train within these virtual worlds," he explained.

Why has Huang prioritized the discourse around industrial visualization, virtual worlds, and digital twins? What prompted NVIDIA to introduce its new NIM microservices within the CUDA ecosystem at this juncture?

Rev Lebaredian, NVIDIA's Vice President of Omniverse and Simulation Technology, said that the wave of generative AI for heavy industry has officially arrived. Reports indicate that generative AI is moving from simple applications into complex production processes, and this technology ecosystem is expected to accelerate that transition.

“Until recently, the primary users of the digital realm were within creative industries; with the latest enhancements and accessibility of NVIDIA NIM microservices for OpenUSD, any sector can now create physics-based virtual worlds and digital twins, preparing for this new wave of AI-driven technologies,” Lebaredian explained.

In the automotive sector, Chinese companies are pushing hard on digital twins. A representative of a major Chinese automaker noted, "Tesla is about to release FSD version 12.5 and is actively promoting the FSD rollout in China." The source stressed that Tesla treats simulation as a strategic priority. The source also said automakers are turning to the metaverse to close the data loop for autonomous driving. Collecting data on "ghost probe" scenarios, in which a pedestrian or vehicle suddenly emerges from a blind spot, used to be difficult and expensive. Companies can now cover such rare scenarios in metaverse simulation environments.

In the robotics industry, a company that makes electrical inspection robots is training AI in simulated environments. This lets its robots navigate complex power plant facilities, plan movement paths, and read thousands of meters and gauges on different equipment.

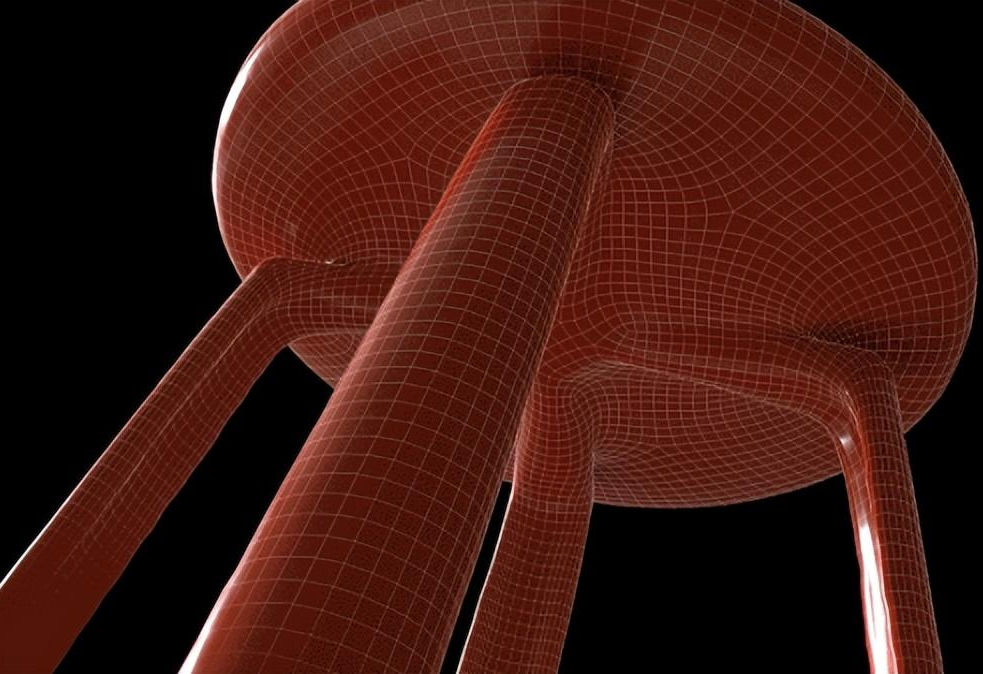

Architectural design is complex and time-consuming, and 3D models are among its essential deliverables. Reconstructing models of complex geometries and unconventional structures is especially challenging. Several design firms are now working with AI companies to generate models from just a few photographs, sketches, or text descriptions. They can also apply different materials to refine their designs.

In the metallurgical sector, metallographic analysis examines internal defects and structures in material samples under a microscope to determine their properties. It has historically been inefficient and heavily dependent on human expertise. Many steel companies now share a common goal: using their existing knowledge bases to train specialized AI that can perform comprehensive material analysis.

NVIDIA's new NIM microservices let application companies build on existing services instead of starting from scratch, integrating their own data to deliver applications efficiently. For this reason, some companies have described the initiative as "CUDA native."

As generative AI transitions from peripheral scenarios to more central applications, Huang proclaimed, “Everyone will possess their own AI assistant.” The integration of AI with imaging technologies is deepening, and “virtually every industry will feel the impact of this technology—whether it is in scientific calculations that yield improved weather predictions with lower energy consumption, collaborating with creators to generate images, or establishing virtual scenarios for industrial visualization,” Huang asserted. He also pointed out, “Generative AI is set to revolutionize areas such as robotics and driverless vehicles.”

New NIM Microservices: Unleashing Potential

At the core of these industry applications lies the reliance on 3D modeling and simulation technologies.

Historically, constructing 3D content and scenes has posed significant challenges, involving complex workflows of modeling, texturing, animation, lighting, and rendering.

Over the past few decades, animation studios, visual effects companies, and game developers have tried to improve interoperability between the tools in their workflows, with limited success. Moving data from one tool to another was cumbersome, so studios built convoluted workflows just to manage data interoperability.

Moreover, collaboration in traditional 3D production has been linear. Work passes from department to department, requiring multiple formats and repeated edits, which is time-consuming and resource-intensive.

OpenUSD (Universal Scene Description) was originally developed by Pixar and released as open source in 2016. In 2023, Pixar, Adobe, Apple, Autodesk, and NVIDIA founded the Alliance for OpenUSD to standardize it. As a universal 3D data interchange framework, OpenUSD lets compatible software tools and data types work together to build virtual worlds. Its high interoperability and compatibility address many of the challenges of 3D scene creation.

OpenUSD is the foundation of NVIDIA's Omniverse platform. Huang described OpenUSD as the first format to bring together multimodal data from nearly every tool. Over time, he suggested, almost any format could be brought into it, enabling broad collaboration and the permanent preservation of content. Generative AI is expected to make simulations in Omniverse even more realistic.

According to NVIDIA, its NIM microservices for OpenUSD are the world's first generative AI models built for OpenUSD development. They bring generative AI into USD workflows and substantially lower the barrier to adopting OpenUSD. NVIDIA also unveiled a series of new USD connectors for robotics data formats and for streaming to Apple Vision Pro.

Three NIM microservices have been released so far. The first, USD Code NIM, answers general OpenUSD questions and generates OpenUSD Python code from text prompts.

The second, USD Search NIM, lets developers search large libraries of OpenUSD, 3D, and image data using natural language or image inputs. This greatly speeds up how companies find and process assets.

The third, USD Validate NIM, checks whether uploaded files are compatible with OpenUSD release versions. It also generates fully path-traced RTX renders using NVIDIA Omniverse Cloud APIs.

Beyond NVIDIA's own NIM microservices, ecosystem partners are offering a range of popular AI models as NIM microservices, giving users optimized inference.

Shutterstock, the global creative content platform, has launched a text-to-3D service built on NVIDIA's latest Edify visual generation model. The service can be used to prototype 3D assets or to enrich virtual environments.

For instance, creating lighting that reflects realistically within a virtual scene used to be complicated. Creators had to use expensive 360-degree camera equipment and travel to shoot scenes from scratch, or search enormous libraries for similar content.

Now, with 3D generation services, users only need to describe the environment they want in text or with an image. The service returns high-dynamic-range 360-degree panoramas (360 HDRi) at resolutions of up to 16K. Scenes and objects can also be swapped independently, so the same sports car can appear against a desert, a tropical beach, or a winding mountain road.
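A 360 HDRi is typically stored as an equirectangular panorama. This is a 2:1 image in which every pixel corresponds to a direction on the sphere around the scene, so a renderer can look up incoming light by direction. The sketch below makes three assumptions: one common axis convention (Y up, -Z at the panorama's center), "16K" meaning 16384 x 8192 pixels, and half-float RGB storage. Renderers and vendors differ on all three. The sketch also shows why such files are large.

```python
import math

# Assumption: "16K" equirectangular panorama at a 2:1 aspect ratio.
WIDTH, HEIGHT = 16384, 8192


def direction_to_pixel(x, y, z, width=WIDTH, height=HEIGHT):
    """Map a unit direction to (column, row) in an equirectangular image.

    Convention assumed here: +Y is up and -Z is the panorama's center.
    Row 0 is the zenith (straight up); the last row is the nadir.
    """
    lon = math.atan2(x, -z)                  # longitude in [-pi, pi]
    lat = math.asin(max(-1.0, min(1.0, y)))  # latitude in [-pi/2, pi/2]
    u = (lon + math.pi) / (2 * math.pi)      # 0..1, left to right
    v = (math.pi / 2 - lat) / math.pi        # 0..1, top to bottom
    col = min(int(u * width), width - 1)     # clamp the u == 1.0 edge
    row = min(int(v * height), height - 1)   # clamp the v == 1.0 edge
    return col, row


def uncompressed_bytes(width, height, channels=3, bytes_per_channel=2):
    """Size of an uncompressed image; 2 bytes per channel = half float."""
    return width * height * channels * bytes_per_channel


if __name__ == "__main__":
    print(direction_to_pixel(0.0, 0.0, -1.0))  # center of the panorama
    print(direction_to_pixel(0.0, 1.0, 0.0))   # straight up (row 0)
    print(WIDTH * HEIGHT)                      # total pixel count
    print(uncompressed_bytes(WIDTH, HEIGHT) / 2**20, "MiB")
```

Under these assumptions, a single uncompressed 16384 x 8192 half-float RGB panorama holds about 134 million pixels and takes 768 MiB. That size explains why HDR panoramas are usually stored in compressed formats.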

Beyond lighting, users can quickly apply rendering materials such as concrete, wood, or leather to their 3D assets. These AI-generated assets can also be edited and exported in a variety of popular file formats.

NVIDIA's Edify model has also enhanced Getty Images' generative AI service, giving artists precise control over the composition and style of their images. For example, a user can place a red beach ball in a photo of a pristine coral reef while preserving the original composition. Creators can also fine-tune models with company-specific data to generate images that match a particular brand style.

These microservices and tools are significantly speeding up how brands create 3D assets, making digital twins more widespread and accessible.

Early Adopters Are Experimenting

As the creation of 3D content and assets becomes more user-friendly and precise, sectors such as industrial manufacturing, autonomous driving, engineering, and robotics are beginning to reap the benefits of generative AI technologies. Particularly in manufacturing and advertising, a host of pioneering enterprises are actively utilizing the NVIDIA Omniverse platform to hasten the implementation of digital twins and simulations.

Coca-Cola is the first brand to deploy the generative AI capabilities of Omniverse and NIM microservices in its marketing. In a demonstration video, a user only had to enter a natural-language request: "Build me a table with tacos and salsa, bathed in morning light."

The USD Search NIM microservice then searched a large 3D asset library for suitable models and retrieved them through API calls. Meanwhile, USD Code NIM generated the Python code needed to assemble them into a scene. With generative AI, Coca-Cola can tailor its brand imagery for more than 100 markets worldwide, enabling localized marketing.
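The sketch below makes the "assemble retrieved models into a scene" step concrete. It builds an OpenUSD text layer (.usda) in which each prim references a separately stored asset file and gets its own position. It is a minimal illustration using only the Python standard library, not the code USD Code NIM generated in the demo. The `PlacedAsset` type, the `compose_scene` helper, and the asset paths are all hypothetical. Real pipelines would normally author scenes through Pixar's `pxr` Python API rather than writing USDA text by hand.

```python
from dataclasses import dataclass


@dataclass
class PlacedAsset:
    """A retrieved asset to place in the scene (hypothetical structure)."""
    name: str          # prim name; must be a valid USD identifier
    asset_path: str    # path to the asset's USD file (hypothetical)
    translate: tuple   # (x, y, z) position in scene units


def compose_scene(assets, root="World"):
    """Return a .usda layer that references each asset under /<root>."""
    lines = [
        "#usda 1.0",
        "(",
        f'    defaultPrim = "{root}"',
        ")",
        "",
        f'def Xform "{root}"',
        "{",
    ]
    for a in assets:
        x, y, z = a.translate
        lines += [
            # A reference pulls the asset's contents in without copying them.
            f'    def Xform "{a.name}" (',
            f"        prepend references = @{a.asset_path}@",
            "    )",
            "    {",
            f"        double3 xformOp:translate = ({x}, {y}, {z})",
            '        uniform token[] xformOpOrder = ["xformOp:translate"]',
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical search results for "a table with tacos and salsa".
    scene = compose_scene([
        PlacedAsset("Table", "./assets/table.usd", (0, 0, 0)),
        PlacedAsset("Tacos", "./assets/tacos.usd", (0, 0.75, 0)),
        PlacedAsset("Salsa", "./assets/salsa.usd", (0.2, 0.75, 0.1)),
    ])
    print(scene)
```

Because the scene only references the assets, the same table or props can be reused across many localized scenes. A different layer can swap a referenced file or override a position without touching the originals.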

WPP, Coca-Cola's advertising partner, introduced an intelligent marketing operating system built on the Omniverse development platform and OpenUSD. It streamlines and automates the creation of multilingual text, images, and videos, simplifying content production for advertisers and marketers. By applying generative AI for its clients, WPP is turning creative ideas into finished work.

As WPP’s Chief Technology Officer articulated, “The beauty of these innovations lies in their high compatibility with our work processes, fully leveraging open standards. Not only does this accelerate future work, but it also solidifies and expands our previous investments in standards like OpenUSD. Through the implementation of NVIDIA NIM microservices and NVIDIA Omniverse, we can launch creative new production tools in partnership with clients like Coca-Cola at unprecedented speeds.”

Foxconn, the world's largest consumer electronics contract manufacturer, has built a digital twin of a new factory in Mexico. Engineers can define processes and train robots in a virtual environment, raising automation and production efficiency while saving time, cost, and energy.

Using the Omniverse platform, Foxconn brought all of its 3D CAD components into a single virtual factory. It then used NVIDIA Isaac Sim, a scalable robotics simulation application built on Omniverse and OpenUSD, to train robots. This gives the digital twin physically accurate behavior and realistic visuals.

In addition to Foxconn, electronics manufacturers such as Delta Electronics, MediaTek, and Pegatron are adopting NVIDIA AI and Omniverse to build digital twins of their factories.

Meanwhile, XPeng Motors used the Omniverse platform to design its MPV, the XPeng X9. By moving its vehicle development workflow into a virtual space, the company avoided the bottlenecks of traditional workflows.

For instance, the Omniverse platform's strong interoperability eliminates cumbersome conversions of the files and data used for industrial modeling, rendering, and 3D effects. This speeds up communication and collaboration within XPeng's design team. With real-time rendering and ray tracing, XPeng could also preview changes to exterior colors and interiors instantly. The resulting visuals are more realistic, better reflect user expectations, and improve the overall product experience.

Over the past two years, the explosive growth of generative AI has drawn attention mainly to consumer and office applications. Now the physical world appears to be on the verge of a new wave of breakthroughs and opportunities.
